Checkpointing is one of the key aspects of transactional replication. A target database checkpoint tells which transactions are already committed and which are not yet. Let’s look at how that works for the Oracle GoldenGate Classic Replicat.

Disclaimer: This article covers only Classic Replicat running in a single thread. It is just an introduction to a bigger topic. Rereading the text after writing it, I noticed that all of this is fairly obvious, but it may still be a useful introduction for somebody who is new to the subject.

For Integrated Replicat and other parallel replication techniques the picture is a bit more complicated and will be covered later.

1. Source database (Redo)

No matter how the transactions are interleaved on the source database, what matters most for replication is the order of the COMMIT operations.

It might seem strange at first, but the actual SCN (or timestamp) of the individual operations in the source database does not matter. What matters is the SCN (and so the timestamp and the order) of the commit operation. The commits define the order of the transactions in the source database.

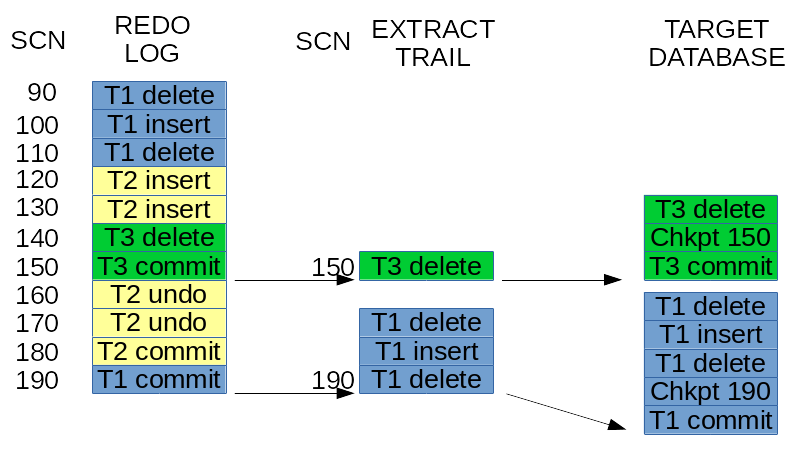

The image above shows 3 transactions:

- T1 has some DML operations and a commit at SCN 190,

- T2 has some DML operations and a rollback which caused some UNDO operations to revert the changes – and also a commit at the end,

- T3 has some DML operations and a commit at SCN 150.

Yes indeed: a rollback operation also causes a commit to appear in the redo log.

2. The trail

According to the commit sequence, the first transaction to be replicated is T3, at SCN 150, followed by T1, at SCN 190. T2 was rolled back, so it does not appear in the trail files. The Extract process automatically ignores it: it has no importance for replication, and the effect is the same as if it had never happened.

The OGG trail will contain only the T3 transaction and the T1 transaction. OGG does not write a special commit record to the trail. Instead, every DML operation in the trail carries a special indicator (TransInd) that describes its position within the transaction: the beginning (0), the middle (1) or the end (2) of a transaction, or a transaction consisting of a single DML operation (3).

Everything in the trail files belongs to committed transactions; there are no rolled back transactions here. It is also important to note that transactions are not interleaved in the trail files. They appear in the exact commit order.

What do we know at this point? No matter how the particular DMLs were interleaved in the source database, to replicate the transactions to the target database they must be executed in the exact order of the commit sequence, according to the SCNs of the commit operations in the source database.
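The path from interleaved redo to a commit-ordered trail can be sketched in a few lines of Python. This is only a toy model, not how Extract is implemented: the redo stream, the SCNs of the DML records and the `ROLLBACK` marker are made up for illustration, and only the commit SCNs of T1 (190) and T3 (150) and the TransInd values come from the description above.

```python
from collections import defaultdict

# Hypothetical, simplified redo stream: (scn, transaction, operation).
# T2's undo records and its closing commit are collapsed into one ROLLBACK marker.
REDO = [
    (100, "T1", "INSERT"),
    (110, "T3", "INSERT"),
    (120, "T2", "UPDATE"),
    (130, "T3", "UPDATE"),
    (140, "T2", "ROLLBACK"),
    (150, "T3", "COMMIT"),
    (160, "T1", "UPDATE"),
    (170, "T1", "DELETE"),
    (190, "T1", "COMMIT"),
]


def trans_ind(index, total):
    """Position of a DML inside its transaction: 0 begin, 1 middle, 2 end, 3 single."""
    if total == 1:
        return 3
    if index == 0:
        return 0
    if index == total - 1:
        return 2
    return 1


def build_trail(redo):
    """Buffer DML per transaction and emit it only when the commit is seen."""
    pending = defaultdict(list)  # open transactions: id -> buffered DML
    trail = []
    for scn, xid, op in redo:  # redo is read in SCN order
        if op == "COMMIT":
            ops = pending.pop(xid, [])
            for i, dml in enumerate(ops):
                # every record carries the commit SCN of its transaction
                trail.append((scn, xid, dml, trans_ind(i, len(ops))))
        elif op == "ROLLBACK":
            pending.pop(xid, None)  # rolled back: never reaches the trail
        else:
            pending[xid].append(op)
    return trail


trail = build_trail(REDO)
for record in trail:
    print(record)
```

The resulting trail holds T3 first and T1 second, with no record of T2 and no interleaving, even though T1 started before T3 in the redo.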

3. Trail checkpointing

The architecture of trails shows that there is no additional checkpointing in the trail itself. A trail file has no companion file holding a checkpoint. There is just a checkpoint file (in the dirchk folder) attached to the process, which records the latest CSN in the trail, but the trail itself is just a queue.

If anything goes wrong, the process (Extract or Replicat) can go back some time in its source and fetch again the information that was lost or only partially written to the trail.

4. Target database checkpointing

Just looking at the architecture reveals an important thing: committing the transactions alone to the target database, without any checkpointing information, is not enough. The checkpoint table in the target database is crucial. In most cases you need it anyway.

There might be situations where it would be very difficult to tell the exact position of the Replicat process: which transactions are committed and which are not. In a complex replication setup (for example one using INSERTALLRECORDS) it is not possible to work out from the data what is committed and what is not. And you can’t just use HANDLECOLLISIONS to somehow manage that.

For example:

- The Replicat process was restarted and did not have enough time to record the committed transaction in the checkpoint file, although the transaction was committed in the database. It is virtually impossible to update the OGG checkpoint file at exactly the same moment as the database commits. After a failure the two can get out of sync;

- The target database has been recovered from backup to an earlier point in time (an earlier committed SCN from the source). Suddenly the target database only contains transactions up to that earlier point and you might want to “go back in time” with the replication (for example using START REPLICAT with ATCSN or AFTERCSN);

- The OGG checkpoint file has been corrupted and the Replicat needs to be recovered (by restoring an earlier version of the checkpoint file from backup).

In those situations (and maybe in some other disaster scenarios too), without a checkpoint table in the target database the Replicat process can never be sure exactly which transactions were already replicated and which were not.
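The first scenario is easy to reproduce in a toy model. The sketch below is not OGG code: the trail contents, the `run_replicat` function and the dictionary standing in for the checkpoint file are all hypothetical. It only shows what happens when the database commit and the checkpoint file update are two separate steps and the process dies between them.

```python
# Trail in commit order: (csn, row). The rows are made-up placeholders.
TRAIL = [(150, "row from T3"), (190, "row from T1")]


class Crash(Exception):
    """Simulated process failure."""


def run_replicat(trail, target_rows, checkpoint_file, crash_after_csn=None):
    """Apply every transaction newer than the CSN stored in the checkpoint file."""
    for csn, row in trail:
        if csn <= checkpoint_file["csn"]:
            continue  # already applied, according to the file
        target_rows.append(row)  # step 1: commit in the target database
        if csn == crash_after_csn:
            raise Crash(csn)  # failure between step 1 and step 2
        checkpoint_file["csn"] = csn  # step 2: update the checkpoint file


target_rows = []               # stands in for an insert-only target table
checkpoint_file = {"csn": 0}   # stands in for the file in dirchk

try:
    run_replicat(TRAIL, target_rows, checkpoint_file, crash_after_csn=190)
except Crash:
    pass  # the file still says 150, but T1 is already in the database

run_replicat(TRAIL, target_rows, checkpoint_file)  # restart from the file
print(target_rows)
```

After the restart the row from T1 is present twice. With an insert-only target (the INSERTALLRECORDS case above) nothing in the data itself reveals that the second copy is a duplicate.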

5. Checkpoint table

A checkpoint table comes to the rescue. There is one row in the checkpoint table per Replicat. This row holds the SCN (which OGG calls CSN) of the last committed transaction, stored in the column LOG_CSN (or LOG_CMPLT_CSN). For a single thread those two columns should be equal. The table is managed very simply: the row is updated together with every replicated transaction (or group of transactions). A special UPDATE command is executed at the end of the transaction, inside the same database transaction as the replicated changes.

This way the transaction mechanism of the database itself keeps up-to-date information about exactly what has been replicated and what has not. After any of the scenarios mentioned above it is possible to position the Replicat exactly where it finished earlier. Even if all trails and checkpoint files were corrupted and had to be recreated (from redo and archive logs), it is still possible to fully recover the replication without any loss. You might even have to set up the replication from scratch. All you need is the redo/archive logs from the source database for the missing period.

Pros:

- A foolproof approach available in OGG Classic Replicat for all database architectures (Oracle, SQL Server, DB2, Sybase, MySQL, etc.),

- This idea is very simple and covers many disaster scenarios,

- Also works with batching: one checkpoint update can cover many transactions combined into one,

- Also works with asynchronous (no wait) commits: they do not affect the atomicity of the transactions,

- No risk of transaction duplication, even in the worst-case scenario.

Cons:

- In this simple form it works only for single-threaded replication: for multi-threaded replication the trick with one CSN (SCN) position and one row per Replicat does not work,

- Performance impact: it requires one additional UPDATE operation for every replicated transaction (or group of transactions),

- Polluting the target database with one extra table: the solution requires adding an additional table to the target database.
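The batching point from the pros and the performance point from the cons are two sides of the same trade-off, which a small sketch can make concrete. The grouping rule below (close a group once it holds at least `max_ops` operations, never split a transaction) and the `max_ops` name are assumptions made for illustration, not a description of how Replicat decides group boundaries.

```python
def group_transactions(trail, max_ops):
    """Combine consecutive transactions until a group holds at least max_ops operations."""
    groups, current, ops = [], [], 0
    for csn, rows in trail:
        current.append((csn, rows))  # a transaction is never split
        ops += len(rows)
        if ops >= max_ops:
            groups.append(current)
            current, ops = [], 0
    if current:
        groups.append(current)  # last, possibly smaller, group
    return groups


def checkpoint_updates(groups):
    """One checkpoint UPDATE per group, carrying the CSN of its last transaction."""
    return [group[-1][0] for group in groups]


# Hypothetical trail: (commit CSN, operations of the transaction).
trail = [(150, ["a", "b"]), (190, ["c"]), (210, ["d", "e", "f"]), (230, ["g"])]

groups = group_transactions(trail, max_ops=3)
updates = checkpoint_updates(groups)
print(updates)
```

Here four source transactions need only three checkpoint updates. A larger threshold lowers the overhead further, at the price of redoing a bigger group if it fails before its commit.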

Running Classic Replicat without a checkpoint table should be considered only for test environments. Everybody should use a checkpoint table where possible.

This is just the simplest case. In the next article we’ll look at more complex situations – when parallel replication is being used.
